After I’d had a chance to get my hands on and evaluate the Supermicro E300-9D-4CN8TP Superservers, it became clear pretty quickly that these servers would work nicely as hosts for my new lab. They were compatible with all of the requirements I’d laid out when I began work on this project, which were for the new lab environment to:

1.) Have enough resources to carry all of my personal development app and database servers, plus redundant capacity.

2.) Be cost-effective. (e.g., whenever it makes sense and is possible, choose decommissioned/used enterprise-grade gear, choose previous-generation components, choose less performant components if potential cost-savings are significant)

3.) Not take up a ton of (physical) space in my house.

4.) Not run up my electricity bill much more than is necessary.

Just looking at one of these Superservers next to one of my old ThinkServer towers, it was clear that these compact form-factor servers were going to go a long way toward meeting requirement number three.

For my purposes, and because I intend to evaluate VMware vSAN in my homelab, each of my hosts will require at least three storage devices:

- Storage for the hypervisor OS (ESXi)

- Storage for the vSAN caching tier

- Storage for the vSAN capacity tier

After some research and after referring to VMware’s documentation, as well as Paul Braren’s VMworld talk on vSAN storage device selection, I felt more or less confident that I could use a thumb drive and an M.2 device for the first and second storage device requirements, respectively.

The choice of M.2 device was not immediately obvious to me. I’d first thought to myself, “Okay, this is cache, so it needs to be as fast as possible, right? Better look at NVMe PCIe devices.” However, after some more research, I was second-guessing my assumption. In short, here’s why: Power Loss Protection (PLP) and write endurance.

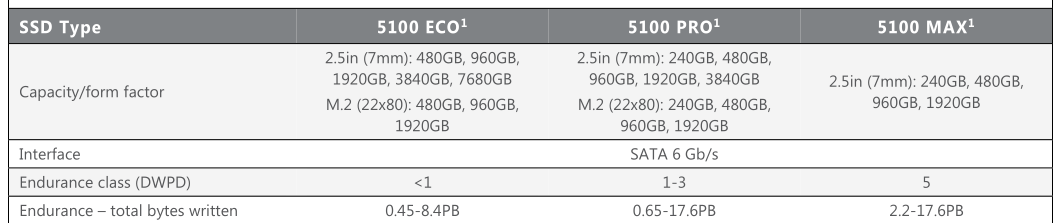

While researching, I saw a recurring theme (for example, on VMware’s vSAN community discussion board and elsewhere) on the importance of selecting devices with Power Loss Protection (PLP) capability for shared storage applications, including vSAN. After poring over product datasheets for several consumer-grade PCIe and SATA storage devices, I found PLP mentioned in only a couple of them. Maybe my search wasn’t thorough enough, but I couldn’t find any M.2 PCIe NVMe devices that were equipped with PLP and available on the used market. However, I did find a handful of M.2 SATA device models that I could positively confirm as being PLP-capable.

UPDATE: Around a year or so after I completed my lab build, I started to see a couple of M.2 form-factor models of enterprise-grade PCIe NVMe SSDs popping up on eBay. Keep your eyes peeled for Seagate’s Nytro SSDs and for Micron 7300 SSDs. Both are PLP-capable and worth considering for folks looking for M.2 form-factor PCIe devices for their vSAN caching tier!

If you’re going through the process of reviewing datasheets for storage devices, you may want to also look out for write endurance specs. Write endurance isn’t necessarily critically important for every application, but it’s another point to consider. It’s worth noting that the datasheet for one model of PLP-equipped SATA SSD featured a write endurance nearly double that of (what were, at the time) the best performing NVMe SSDs.
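Datasheets tend to quote write endurance either as total terabytes written (TBW) or as drive writes per day (DWPD) over the warranty period, which makes side-by-side comparisons awkward. Here's a rough sketch of the usual conversion between the two, in case it helps when comparing candidates. The function name and the numbers in the example are placeholders I made up for illustration; they are not taken from any real datasheet, so plug in the figures for the devices you're actually looking at.

```python
def drive_writes_per_day(tbw_tb: float, capacity_tb: float, warranty_years: float) -> float:
    """Convert a rated endurance in terabytes written (TBW) into drive writes per day (DWPD).

    DWPD = TBW / (capacity in TB * 365 days * warranty period in years)
    """
    if capacity_tb <= 0 or warranty_years <= 0:
        raise ValueError("capacity_tb and warranty_years must be positive")
    return tbw_tb / (capacity_tb * 365 * warranty_years)


# Hypothetical example figures only (NOT real datasheet values).
candidates = {
    "hypothetical SATA SSD with PLP": {"tbw_tb": 8760, "capacity_tb": 0.96, "warranty_years": 5},
    "hypothetical consumer NVMe SSD": {"tbw_tb": 600, "capacity_tb": 1.0, "warranty_years": 5},
}

for name, spec in candidates.items():
    print(f"{name}: {drive_writes_per_day(**spec):.2f} DWPD")
```

Normalizing everything to DWPD makes it easier to compare drives of different capacities, since a big TBW number on a large drive doesn't necessarily mean better endurance per gigabyte.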

Now that I knew which devices would work, it was time to head to eBay. It wasn’t long before I found a good deal on a few Micron 5100 MAX SATA III solid-state drives, which also happen to be listed among the devices that VMware supports for use as vSAN cache devices (not a strict requirement for my homelab build, but definitely preferred).

Now that I’d solved for the first two storage devices, it was time to figure out what I was going to do for each host’s vSAN capacity tier storage. As you can see, there’s only room for one 80mm M.2 device on the server motherboard, and I had just filled that space. The E300-9D-4CN8TP motherboard has a few different ports that could be used for connecting one or more additional SATA devices, but there’s not really much room in the server chassis for anything else, except for maybe a single 2.5″ HDD or SSD.

To solve this problem and still adhere to my project requirements, it looked like I would need to locate my vSAN capacity devices externally. I cover the details of the solution I chose in my second post. Thank you for reading!