In Part 1 of my homelab build series, I looked at the storage requirements for the project and began narrowing down possible choices for each type of storage device required in a vSAN cluster host.

Up to this point I’d made a decision for two of the three devices I needed for each host in the cluster:

- Storage for the hypervisor OS (ESXi)
- Storage for the vSAN caching tier
- Storage for the vSAN capacity tier

William Lam’s approach to this problem, as detailed in his blog post, was to take advantage of the server’s PCIe 3.0 x16 slot to add another M.2 storage device and satisfy the requirements for both vSAN tiers that way. For a lean “proof of concept” vSAN cluster, building each host with a single cache device and a single capacity device is going to be optimal. That is only the minimum, though: a vSAN disk group pairs exactly one cache device with anywhere from one to seven capacity devices.

Adding more than one capacity device to each host will make my lab take up more physical space and draw more power. On the other hand, it removes a single capacity drive as a single point of failure, and it lets each host’s capacity tier be built from several less expensive, lower-capacity SSDs that still offer power loss protection (PLP). To me, this tradeoff better suited my requirements.
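To make the disk-group limits concrete, here is a minimal sketch that checks a planned per-host layout against them, assuming the vSAN 6.x rule of one cache device plus one to seven capacity devices per disk group. The `DiskGroup` class, `validate_disk_group` function, and device labels are hypothetical names for illustration, not part of any VMware tooling.

```python
from dataclasses import dataclass, field

# Assumed vSAN 6.x disk-group limits: exactly one cache-tier device plus
# one to seven capacity-tier devices per disk group.
MIN_CAPACITY_DEVICES = 1
MAX_CAPACITY_DEVICES = 7


@dataclass
class DiskGroup:
    cache_device: str  # hypothetical device label, e.g. "m2-cache-0"
    capacity_devices: list[str] = field(default_factory=list)


def validate_disk_group(group: DiskGroup) -> list[str]:
    """Return human-readable problems; an empty list means the layout fits."""
    problems = []
    if not group.cache_device:
        problems.append("a disk group needs exactly one cache device")
    count = len(group.capacity_devices)
    if count < MIN_CAPACITY_DEVICES:
        problems.append("a disk group needs at least one capacity device")
    elif count > MAX_CAPACITY_DEVICES:
        problems.append(
            f"{count} capacity devices exceeds the maximum of {MAX_CAPACITY_DEVICES}"
        )
    if group.cache_device and group.cache_device in group.capacity_devices:
        problems.append("the cache device cannot also be a capacity device")
    return problems


# Lean proof-of-concept layout: one cache device, one capacity device.
lean = DiskGroup("m2-cache-0", ["m2-capacity-0"])
# Layout with several smaller PLP-capable SSDs in the capacity tier.
wider = DiskGroup("m2-cache-0", [f"sas-ssd-{i}" for i in range(4)])

print(validate_disk_group(lean))   # []
print(validate_disk_group(wider))  # []
```

Both layouts pass; the difference between them is cost, space, and power rather than whether vSAN will accept them.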

Before my lab servers could connect to external storage, though, I’d first need to install a host bus adapter (HBA) card in each one. I started with VMware’s hardware compatibility guide to narrow down potential HBA candidates. While comparing and researching the handful of supported devices, I noticed I could achieve enormous cost savings on several components of my build if I was willing to commit to slower 6Gb/s SAS-2 storage devices instead of devices built for the newer, faster 12Gb/s SAS-3 interface. I didn’t have much trouble deciding to stick with SAS-2 (see requirement number two in my HLB Part 1 post!).

With that decision made, the LSI 9207-8e looked like the right HBA for this application, and I was glad to see there’s no short supply of these on eBay.

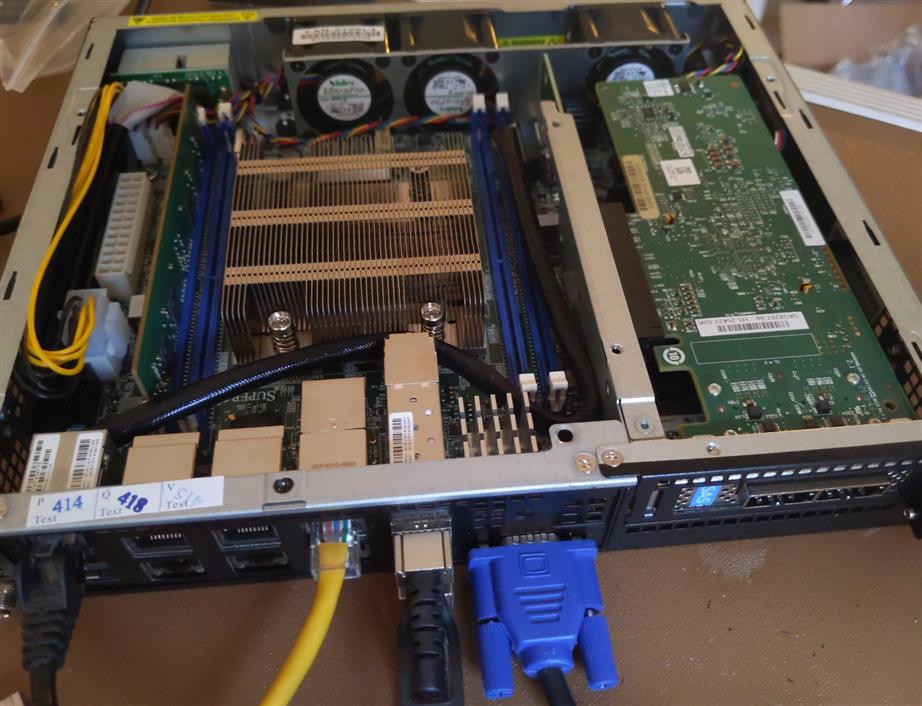

Once my HBA cards arrived, I needed to install them into 90-degree angle brackets so they would fit inside the E300-9D-4CN8TP chassis.

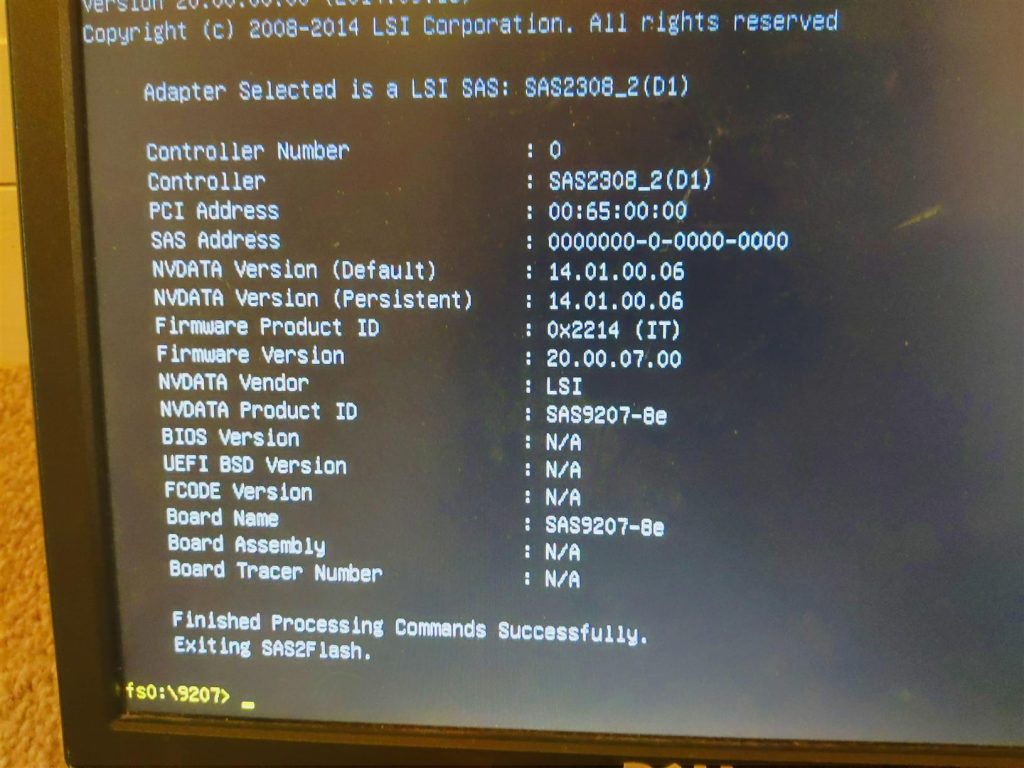

While researching HBAs, I noticed that some folks had experienced issues getting their LSI2308-based devices (including the LSI 9207-8e) to play nice with ESXi and vSAN, so I needed to make sure the firmware on my HBAs matched the LSI driver included in ESXi 6.x. I found this guide very helpful as I worked through the process of flashing the correct firmware to each card. I also erased the BIOS from each HBA, since I wouldn’t be booting from externally connected storage devices; doing this shaves a couple of minutes off the server startup time.
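As an illustration of the matching step, here is a minimal sketch that compares a firmware version against a driver version, assuming the common rule of thumb that the firmware “phase” (the leading number, such as 20 in `20.00.07.00`) should equal the driver’s major version. The function names and version strings are hypothetical; the authoritative pairing for a given ESXi release is whatever VMware’s compatibility guide lists.

```python
def phase(version: str) -> int:
    """Leading "phase" number of an LSI-style version string.

    "20.00.07.00" -> 20; "20.00.01.00-1OEM" -> 20 (everything after the
    first dot is ignored).
    """
    return int(version.strip().split(".", 1)[0])


def firmware_matches_driver(firmware_version: str, driver_version: str) -> bool:
    """Rule-of-thumb check: firmware phase equals driver major version."""
    return phase(firmware_version) == phase(driver_version)


# Hypothetical version strings, for illustration only.
print(firmware_matches_driver("20.00.07.00", "20.00.01.00-1OEM"))  # True
print(firmware_matches_driver("19.00.00.00", "20.00.01.00-1OEM"))  # False
```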

Once the new HBAs were installed and flashed with the correct firmware, I was ready to move on to selecting and connecting external storage to my servers. More details on that stage of the lab build will appear in Part 3 of the series! Thanks for reading!